Installing a Patroni cluster on Ubuntu

So I've recently been quizzed on the exact process I use to install our Postgres cluster, and after telling everyone how easy it was, I was asked to explain the process.

It's not procrastination, it's something else... I swear!

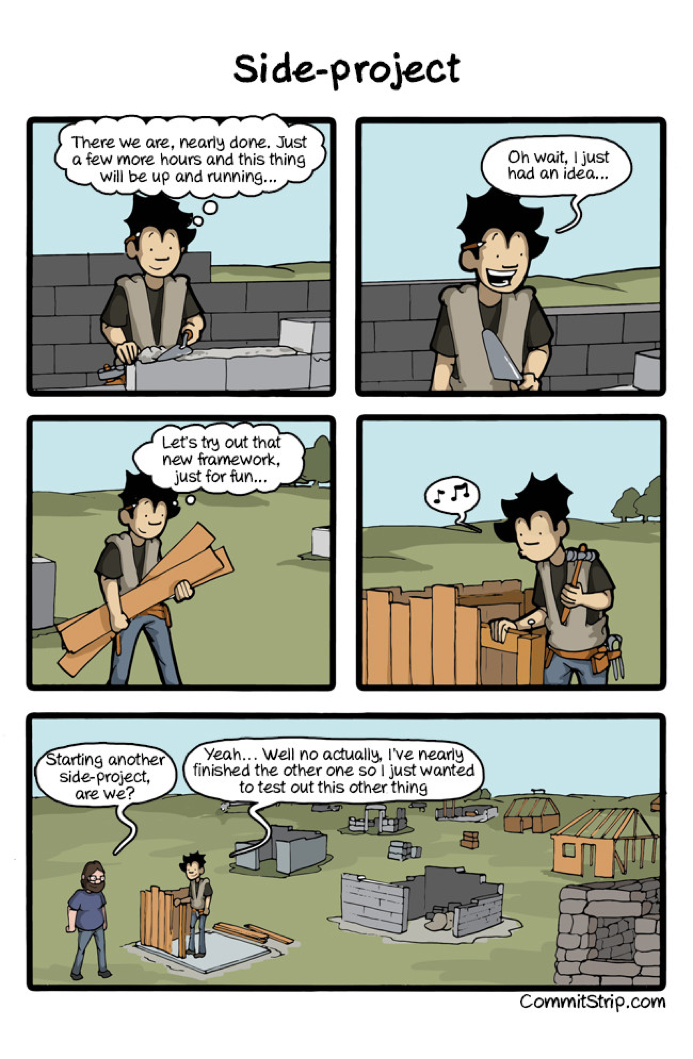

Unfortunately I just have a bunch of embarrassing text files full of commands to run, so I decided it's about time I document it. Perfect opportunity to install that federated personal blog I've been thinking about for 6 months! Except it doesn't work quite the way I want it to and doesn't support images or profile avatars... Perfect opportunity to write an image hosting service that I can integrate with the blog software! Except it doesn't count likes or show replies with my post... Perfect opportunity to add the bits and pieces needed to track federated likes and replies! Except now how do I let people who are seeing my blog post make likes and replies on their home instances? Perfect opportunity to research URI scheme protocol handlers and redirector sites! Except none of them work how I want them to work... Perfect opportunity to design a new way to implement the draft protocol as a proof of concept/bootstrap project! Except I promised to finish this article on the Patroni cluster thing by tonight.

Argh... it's tough living with my brain, I now have another 3 unfinished projects. I don't know how Ada puts up with it... she showed me this picture...

I hate that I resemble this so much.

I hate that I resemble this so much.

Okay, so installing Patroni is really easy, writing about it requires much more effort.

What is Patroni?

This is a really good question and it gets to the heart of the matter. Patroni is a Postgres cluster manager. You give it a bunch of hosts and tell it to run a Postgres cluster on them, and it manages the whole thing. Kinda like how a RAID controller manages an array of (not-so-inexpensive) disks. If there's no database there, it'll start it up, join the cluster, download a copy of the data, and begin replication. If there's a database there but it gets wrecked, it'll automatically rebuild it for you. We'll also install useful other bits of software like HAProxy which points a certain port at the current primary and another port at the secondary. If you've ever had the joy of using any of the many Kubernetes operators for Postgres, it's like that, but for bare metal. When you're working on a budget, sometimes you can't afford the overhead of Kubernetes. If this was a project for a client, I'd probably steer them towards the self-healing nature of a Kubernetes cluster + operator.

Installing

I'm going to take you through the process of setting up a brand new cluster of 3 servers. I've got these servers on an internal network: – db-cluster-1 10.19.96.3 – db-cluster-2 10.19.96.4 – db-cluster-3 10.19.96.5

Run on all 3 hosts:

As we're running Ubuntu, I'm going to do a quick upgrade on them to get all the latest updates first.

apt update && apt upgrade -y && reboot

Now it's time to make sure all the hosts know how to contact each other, even if/when DNS goes down.

cat <<EOF >> /etc/hosts

# Database hosts

10.19.96.3 db-cluster-1

10.19.96.4 db-cluster-2

10.19.96.5 db-cluster-3

EOF

for i in 1 2 3; do

ping -c 1 db-cluster-$i

done

If you're running a firewall (hopefully you are), let's open up some ports well need:

for p in 2379 2380 8008; do

ufw allow in proto tcp from 10.19.96.0/20 to any port $p

done

for p in 5000 5001; do

ufw allow in proto tcp from any to any port $p

done

ufw status

NOTE: If you're putting a load balancer in front, you'll probably want to lock down the port 5000/5001 to just it, or otherwise just your application's network.

Let's now get Percona rocking. Percona is a Postgres database with extra optimizations, this is what we will control with our Patroni controller to turn into a cluster.

curl -O https://repo.percona.com/apt/percona-release_latest.generic_all.deb

apt install -y gnupg2 lsb-release ./percona-release_latest.generic_all.deb

apt update

percona-release setup ppg-16

Now it's time to install our HAProxy software and configure it. This config will setup a proxy with 3 ports, the stats at port 7000, the primary at port 5000 and the standbys at port 5001.

The standbys will use the health check on http://localhost:8008/replica which will return a 200 OK if that server is currently a replica node.

If there are no replicas available, then it falls back to any available node (even the primary) to service standby nodes as a fail safe so your applications that are configured to talk to a read node don't get locked out.

apt install -y percona-haproxy

cat <<EOF > /etc/haproxy/haproxy.cfg

global

maxconn 500

defaults

log global

mode tcp

retries 2

timeout client 30m

timeout connect 4s

timeout server 30m

timeout check 5s

listen stats

mode http

bind *:7000

stats enable

stats uri /

listen primary

bind *:5000

option httpchk /primary

http-check expect status 200

default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions

server db-cluster-1 db-cluster-1:5432 maxconn 500 check port 8008

server db-cluster-2 db-cluster-2:5432 maxconn 500 check port 8008

server db-cluster-3 db-cluster-3:5432 maxconn 500 check port 8008

listen standbys

balance roundrobin

bind *:5001

option httpchk /replica

http-check expect status 200

default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions

server db-cluster-1 db-cluster-1:5432 maxconn 500 check port 8008

server db-cluster-2 db-cluster-2:5432 maxconn 500 check port 8008

server db-cluster-3 db-cluster-3:5432 maxconn 500 check port 8008

server db-cluster-1-standby db-cluster-1:5432 maxconn 500 backup

server db-cluster-2-standby db-cluster-2:5432 maxconn 500 backup

server db-cluster-3-standby db-cluster-3:5432 maxconn 500 backup

EOF

systemctl restart haproxy

Now we're going to install all the fun bits, and immediately disable them since they'll need some configuring first.

apt install -y percona-patroni etcd etcd-server etcd-client percona-pgbackrest

systemctl stop {etcd,patroni,postgresql}

systemctl disable {etcd,patroni,postgresql}

Also we'll remove the existing (default) Postgres folder... if this is a new server this should not be a problem, if you've got an existing Postgres installed, what the hell are you thinking testing on this server!?

rm -rf /var/lib/postgresql/15/main

We need some token/passwords, so lets generate them now on any machine (even your own) run the following command (once):

cat <<EOF

# Run these commands on each server

export DATA_DIR=/opt/data

export ETCD_TOKEN=$(openssl rand -hex 16)

export REPLICATOR_PASS=$(openssl rand -hex 16)

export SUPERUSER_PASS=$(openssl rand -hex 16)

export HOST=\$(hostname -s)

export INTERNAL_IP=\$(getent hosts \$(hostname -s) | grep -v '^127.0.' | cut -d \ -f 1)

echo "I am \$HOST at \$INTERNAL_IP (please check)"

EOF

Copy the output of that and run it on all 3 servers.

We need to create a Patroni config now so our servers will be able to start up later:

cat <<EOF > /etc/patroni/patroni.yml

namespace: mycompany

scope: db-cluster

name: ${HOST}

restapi:

listen: 0.0.0.0:8008

connect_address: ${INTERNAL_IP}:8008

etcd3:

host: ${INTERNAL_IP}:2379

bootstrap:

# this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

postgresql:

use_pg_rewind: true

use_slots: true

parameters:

wal_level: replica

hot_standby: "on"

wal_keep_segments: 10

max_wal_senders: 5

max_replication_slots: 10

wal_log_hints: "on"

logging_collector: 'on'

max_wal_size: '10GB'

archive_mode: "on"

archive_timeout: 600s

archive_command: "cp -f %p ${DATA_DIR}/archived/%f"

recovery_conf:

restore_command: cp ${DATA_DIR}/archived/%f %p

# some desired options for 'initdb'

initdb: # Note: It needs to be a list (some options need values, others are switches)

- encoding: UTF8

- locale: en_US.UTF-8

- data-checksums

postgresql:

cluster_name: cluster_1

listen: 0.0.0.0:5432

connect_address: ${INTERNAL_IP}:5432

data_dir: ${DATA_DIR}/pg

bin_dir: /usr/lib/postgresql/16/bin

pgpass: /tmp/pgpass

authentication:

replication:

username: replicator

password: ${REPLICATOR_PASS}

superuser:

username: postgres

password: ${SUPERUSER_PASS}

parameters:

unix_socket_directories: "/var/run/postgresql/"

create_replica_methods:

- basebackup

basebackup:

checkpoint: 'fast'

pg_hba:

- local all all peer

- local replication all peer

- host replication replicator 127.0.0.1/32 trust

- host replication replicator 0.0.0.0/0 scram-sha-256

- host all all 0.0.0.0/0 scram-sha-256

- host all all ::0/0 scram-sha-256

watchdog:

mode: required # Allowed values: off, automatic, required

device: /dev/watchdog

safety_margin: 5

tags:

nofailover: false

noloadbalance: false

clonefrom: true

nosync: false

EOF

And we want to add a service to prep watchdog etc for Patroni.

cat <<EOF > /etc/systemd/system/patroni-prep.service

[Unit]

Description=Prepare system for Patroni (softdog, watchdog, postgresql dirs)

Before=patroni.service

DefaultDependencies=no

After=local-fs.target

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/bin/bash -c '/usr/sbin/modprobe softdog; chown postgres /dev/watchdog; mkdir -p /var/run/postgresql; chown postgres /var/run/postgresql'

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable patroni-prep

One host at a time now:

Up until now, we've been running commands on all hosts (possibly in parallel), but now we're getting to the bootstrap part where things start deviating.

db-cluster-1

This is our seed server that will initialize and create our cluster. We want to initialize our etcd server here:

cat <<EOF > /etc/etcd/etcd.conf.yaml

name: 'db-cluster-1'

initial-cluster-token: ${ETCD_TOKEN}

initial-cluster-state: new

initial-cluster: db-cluster-1=http://db-cluster-1:2380

data-dir: /var/lib/etcd

initial-advertise-peer-urls: http://db-cluster-1:2380

listen-peer-urls: http://0.0.0.0:2380

advertise-client-urls: http://db-cluster-1:2379

listen-client-urls: http://0.0.0.0:2379

# Storage quota (8GB = 8589934592 bytes)

quota-backend-bytes: 8589934592

# Enable automatic compaction every hour

auto-compaction-mode: periodic

auto-compaction-retention: "1h"

auto-defrag-interval: "24h"

EOF

sudo systemctl enable --now etcd

After a few seconds lets run these 2 commands:

etcdctl member list -w table

etcdctl endpoint status -w table

You should see something like this (one member, and it's the leader):

root@db-cluster-1:~# etcdctl member list -w fields

"ClusterID" : 8919193014159237326

"MemberID" : 17956751917787781469

"Revision" : 0

"RaftTerm" : 2

"ID" : 17956751917787781469

"Name" : "db-cluster-1"

"PeerURL" : "http://db-cluster-1:2380"

"ClientURL" : "http://db-cluster-1:2379"

"IsLearner" : false

root@db-cluster-1:~# etcdctl endpoint status -w fields

"ClusterID" : 8919193014159237326

"MemberID" : 17956751917787781469

"Revision" : 1

"RaftTerm" : 2

"Version" : "3.5.24"

"DBSize" : 20480

"Leader" : 17956751917787781469

"IsLearner" : false

"RaftIndex" : 4

"RaftTerm" : 2

"RaftAppliedIndex" : 4

"Errors" : []

"Endpoint" : "127.0.0.1:2379"

We're now going to add our next member to this cluster so when they start up, the server will know them.

etcdctl member add db-cluster-2 --peer-urls=http://db-cluster-2:2380

db-cluster-2

Lets now join our second etcd to this cluster...

cat <<EOF > /etc/etcd/etcd.conf.yaml

name: 'db-cluster-2'

initial-cluster-token: ${ETCD_TOKEN}

initial-cluster-state: existing

initial-cluster: db-cluster-1=http://db-cluster-1:2380,db-cluster-2=http://db-cluster-2:2380

data-dir: /var/lib/etcd

initial-advertise-peer-urls: http://db-cluster-2:2380

listen-peer-urls: http://0.0.0.0:2380

advertise-client-urls: http://db-cluster-2:2379

listen-client-urls: http://0.0.0.0:2379

# Storage quota (8GB = 8589934592 bytes)

quota-backend-bytes: 8589934592

# Enable automatic compaction every hour

auto-compaction-mode: periodic

auto-compaction-retention: "1h"

auto-defrag-interval: "24h"

EOF

sudo systemctl enable --now etcd

After a few seconds lets run these 2 commands:

etcdctl member list -w table

etcdctl endpoint status -w table

You should now see that there's 2 members, and presumably you're not the leader.

We're now going to add our final member to this cluster, from db-cluster-2

etcdctl member add db-cluster-3 --peer-urls=http://db-cluster-3:2380

db-cluster-3

Lets now join our third etcd to this cluster...

cat <<EOF > /etc/etcd/etcd.conf.yaml

name: 'db-cluster-3'

initial-cluster-token: ${ETCD_TOKEN}

initial-cluster-state: existing

initial-cluster: db-cluster-1=http://db-cluster-1:2380,db-cluster-2=http://db-cluster-2:2380,db-cluster-3=http://db-cluster-3:2380

data-dir: /var/lib/etcd

initial-advertise-peer-urls: http://db-cluster-3:2380

listen-peer-urls: http://0.0.0.0:2380

advertise-client-urls: http://db-cluster-3:2379

listen-client-urls: http://0.0.0.0:2379

# Storage quota (8GB = 8589934592 bytes)

quota-backend-bytes: 8589934592

# Enable automatic compaction every hour

auto-compaction-mode: periodic

auto-compaction-retention: "1h"

auto-defrag-interval: "24h"

EOF

sudo systemctl enable --now etcd

After a few seconds lets run these 2 commands:

etcdctl member list -w table

etcdctl endpoint status -w table

By this stage you should know what to expect.

db-cluster-1

Back on the first node, it's time to start up the very first Patroni instance (This will initialize the base database tables etc, and make the server the stand-alone primary).

mkdir -p ${DATA_DIR}/archived

chown -R postgres:postgres ${DATA_DIR}

systemctl enable --now patroni

journalctl -f -u patroni

Wait for journal to say something like no action. I am (db-cluster-1), the leader with the lock, then exit and run the following command:

patronictl -c /etc/patroni/patroni.yml list

You should get something that looks like:

+ Cluster: db-cluster (7593587474928070257) ---+----+-------------+-----+------------+-----+

| Member | Host | Role | State | TL | Receive LSN | Lag | Replay LSN | Lag |

+--------------+------------+--------+---------+----+-------------+-----+------------+-----+

| db-cluster-1 | 10.19.96.3 | Leader | running | 1 | | | | |

+--------------+------------+--------+---------+----+-------------+-----+------------+-----+

db-cluster-2

Run:

mkdir -p ${DATA_DIR}/archived

chown -R postgres:postgres ${DATA_DIR}

systemctl enable --now patroni

journalctl -f -u patroni

Wait for I am (db-cluster-2), a secondary, and following a leader. You can go back to the first server and watch patronictl list command to see progress.

db-cluster-3

Do the same as for db-cluster-2

Testing the connection

root@db-cluster-3:~# PGPASSWORD=$SUPERUSER_PASS psql -h db-cluster-2 -p 5000 -U postgres \

> -c 'SELECT CURRENT_USER user, inet_server_addr() ip, inet_server_port() port;'

user | ip | port

----------+------------+------

postgres | 10.19.96.3 | 5432

(1 row)

root@db-cluster-3:~# PGPASSWORD=$SUPERUSER_PASS psql -h db-cluster-2 -p 5001 -U postgres \

> -c 'SELECT CURRENT_USER user, inet_server_addr() ip, inet_server_port() port;'

user | ip | port

----------+------------+------

postgres | 10.19.96.5 | 5432

(1 row)

Job done

That's pretty much it. From any server in the cluster you can manage the cluster using the patronictl command.